Animating Urban Nature

Project Description

Ecomimesis is a site-specific virtual reality environment in which viewers have an intimate encounter with animated virtual plants moving through an accelerated life cycle. The project is designed to be customized for the venue in which it is shown, so the architecture of the space is represented within the animation. The species featured is Conyza canadensis, a common urban ‘weed’ chosen because it is prevalent in human-crafted landscapes yet invisible to most people, who choose to ignore such so-called nuisance species. Ecomimesis was inspired by Charles Darwin’s The Power of Movement in Plants, in which he documented his observations of how plants move as they grow. The project’s title is borrowed from philosopher Timothy Morton and signifies an acknowledgment of human entanglement within nature.

Significance

Recent research in the field of plant neurobiology has led to inquiries into the evolutionary purposes of the many ways that plants sense their surroundings, including through analogs to sight, hearing, touch, taste, and smell as well as through perception of electrical, magnetic, and chemical input. [Cazabon] Plants, however, move and react at speeds too slow for humans to perceive.

This project brings the plants that form the backdrop of our lives forward, pushing the observer to be a part of their growth. The featured species is “Conyza canadensis”, colloquially known as horseweed. Despite being a complex plant, this “weed” is often seen as a nuisance because of its prevalence in urban spaces. Plantelligence emerged from my interest in how plants perceive and respond to events occurring in their surrounding environment, as a means to bring attention to how global warming impacts the way plants, and in turn urban landscapes, are currently evolving.

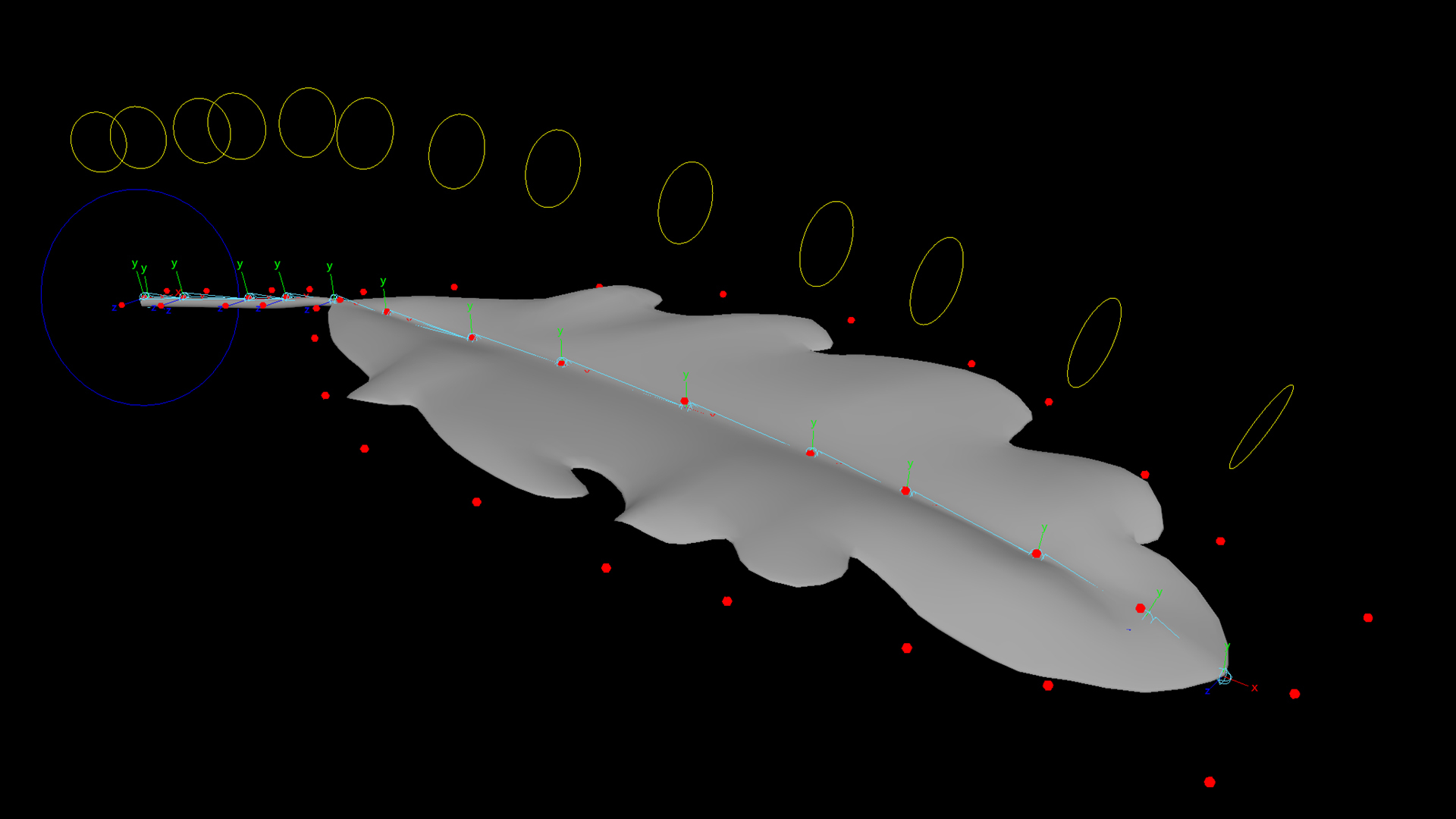

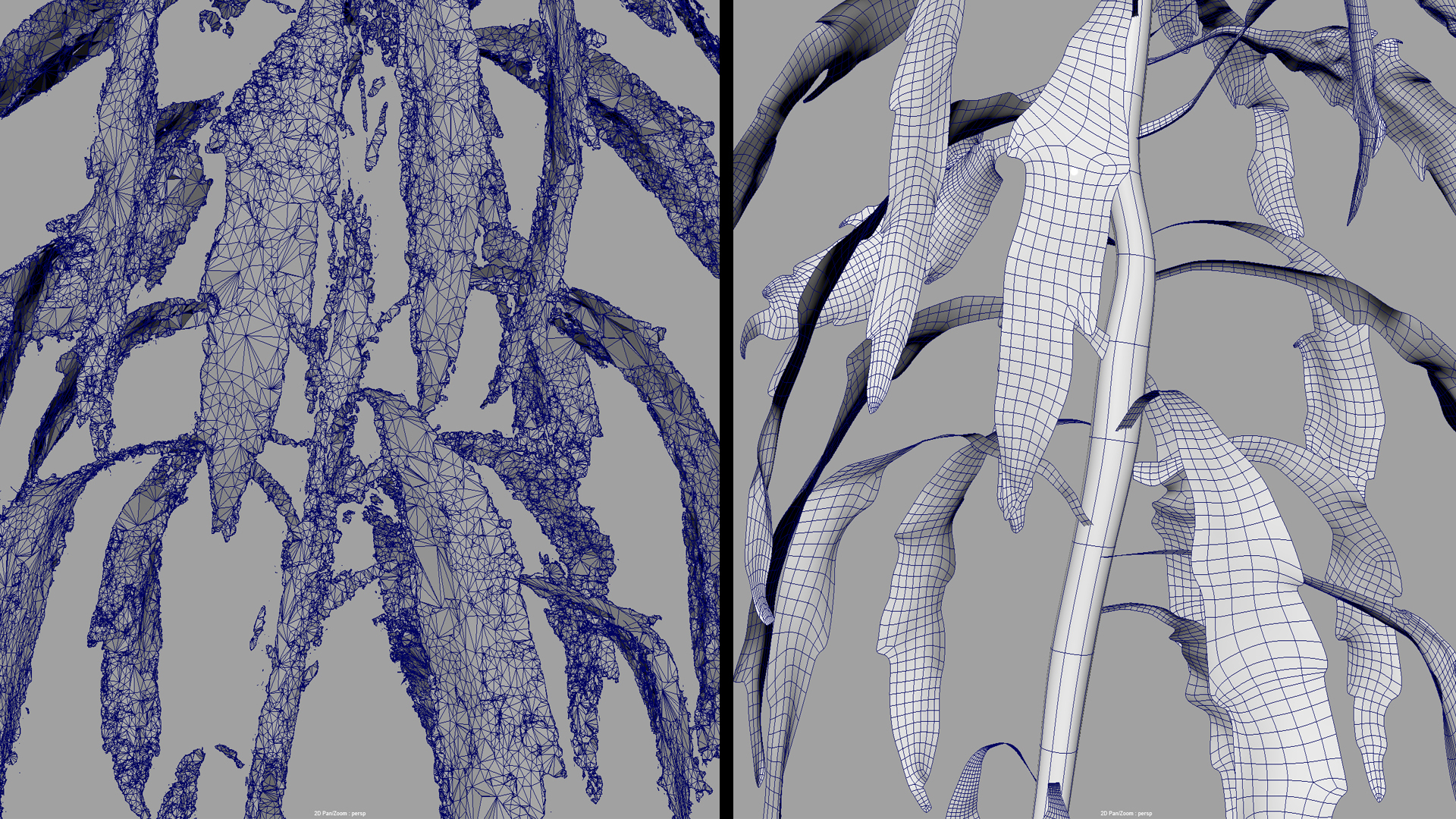

The IRC contributed photogrammetry, 3D modeling, animation, and the virtual reality aspects of the project. Plantelligence first took shape in the photogrammetry rig, which scanned growing horseweed every 30 minutes for two months. This approach failed due to the sheer size of the scan files: each scan would take 3-4 days to process. Furthermore, the models obtained by this process would require many hours of cleaning to be usable.

The plants appear at randomly generated locations and at varying points in their growth cycles. As one plant dies, another emerges.
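The spawning behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's actual code: the source does not describe the implementation, so the names `Plant`, `spawn_plant`, `create_population`, and `step`, the 60-second life cycle, and the rectangular floor area are all hypothetical.

```python
import random
from dataclasses import dataclass

# Hypothetical length of one accelerated life cycle, in seconds.
LIFE_CYCLE_SECONDS = 60.0


@dataclass
class Plant:
    x: float    # position across the room floor (assumed rectangular)
    z: float    # position along the room floor
    age: float  # seconds elapsed since this plant emerged


def spawn_plant(rng, room_width, room_depth, random_age=False):
    """Place a plant at a random floor location.

    With random_age=True the plant starts partway through its life cycle,
    so an initial population shows varying growth stages.
    """
    age = rng.uniform(0.0, LIFE_CYCLE_SECONDS) if random_age else 0.0
    return Plant(rng.uniform(0.0, room_width), rng.uniform(0.0, room_depth), age)


def create_population(rng, count, room_width, room_depth):
    """Create `count` plants at random locations and random growth stages."""
    return [spawn_plant(rng, room_width, room_depth, random_age=True)
            for _ in range(count)]


def step(plants, dt, rng, room_width, room_depth):
    """Advance every plant by dt seconds.

    A plant that reaches the end of its life cycle is replaced by a new
    seedling (age 0) at a fresh random location.
    """
    next_plants = []
    for plant in plants:
        age = plant.age + dt
        if age >= LIFE_CYCLE_SECONDS:
            next_plants.append(spawn_plant(rng, room_width, room_depth))
        else:
            next_plants.append(Plant(plant.x, plant.z, age))
    return next_plants
```

Because each dying plant is replaced one-for-one, the number of plants in the room stays constant while their positions and growth stages keep shifting. In a real VR scene the `age` value would presumably drive the playback position of the plant's growth animation.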

Instead of working with multiple scans, Ryan Zuber cleaned up one model plant, imposing quadrangular polygons on its surface, which allowed the model to be textured and animated.

Funding + Outcomes

In 2018, Cazabon displayed an updated version of this project in the exhibition “Hustle: Exploring the Many Different Definitions of Survival and Success” at the Science Lab Gallery Detroit. An initiative of Michigan State University, the Science Lab Gallery Detroit is part of a network of international galleries featuring projects that blend art, science, and technology and are aimed at reaching young audiences. The animation was customized for the Hustle exhibition: wearing an Oculus headset, viewers saw over a dozen plants emerging in a virtual version of the space in which they were standing. Ecomimesis was shown at two adjacent stations in the gallery.

Researchers and Creators

Project Director: Lynn Cazabon

IRC Technical Director – Modeling: Ryan Zuber

IRC Technical Director – Programming: Mark Jarzynski

Photogrammetry and Programming: Mark Murnane

Imaging Research Center, UMBC © 2024