Getting Real

Computer Graphics Researchers Marc Olano and Mark Jarzynski Evaluate Methods of Generating Randomness to Achieve Realism

People like to recreate the world as they see it. They did it in cave paintings tens of thousands of years ago, and we do it now. You can tell a lot about a culture by the way it depicts the world. In Western civilization there has been a particularly notable interest in creating images and sculptural objects that show people, places, and things as they might be recorded by a camera, that is, photorealistically. This reflects cultural themes of objectivity and empiricism. Of course, artists tried to do this long before there were cameras. We see compelling examples in the ways the ancient Greeks rendered the human body in stone. The European Renaissance saw significant advances as well: establishing a perspective system for rendering a three-dimensional world on a two-dimensional surface, capturing the effects of light on form, and rendering human anatomy learned through dissection (post-mortem, we hope). Realism and naturalism are still visual values pursued doggedly in the production of movies, television, and video games. Many of us marvel at our astounding capacity to render impossible things and events as if they were real down to the last detail, and this continues to represent a compelling challenge.

In the past half century, computing technologies and practices have provided a new way to create realism using computer-generated imagery (CGI). Not long ago, this required an extraordinary level of computing power. If the goal was to create motion graphics for a film, footage had to be rendered one frame at a time, a process requiring hours or days. Given that each second of film or television requires 24-30 frames to produce the illusion of smooth motion, that’s a lot of frames. Now, this can even be done convincingly on a smartphone, and our computers contain specialized hardware, called graphics processing units (GPUs), designed to render these frames quickly. In computer and video games, we expect to render each frame of a moving CGI image as the user needs it, and then discard it once it has been seen. This is called real-time rendering, and we want to be able to do it even at the highest level of realism. Further, we want to create an illusion of movement that is much smoother than ever before. Virtual reality (VR) environments, where video gamers perceive themselves as being immersed in fictional environments created entirely with CGI, require three to four times as many frames per second as film and television: 90 frames per second (fps) or faster. Lower frame rates lead to motion sickness. This has led to interesting challenges in which the Imaging Research Center has become involved.

One such challenge centers on the fact that much of the world looks the way it does because of randomness. Waves on the ocean, grains of sand, clouds in the sky, and trees in the forest are all formed through forces we may understand, but the actual results (how large each wave or grain of sand is, and how they are arranged or patterned, within a certain possible range) depend on how the forces that determine them intersect at a given time and place. What makes the result random is that any one outcome is as likely as any other.

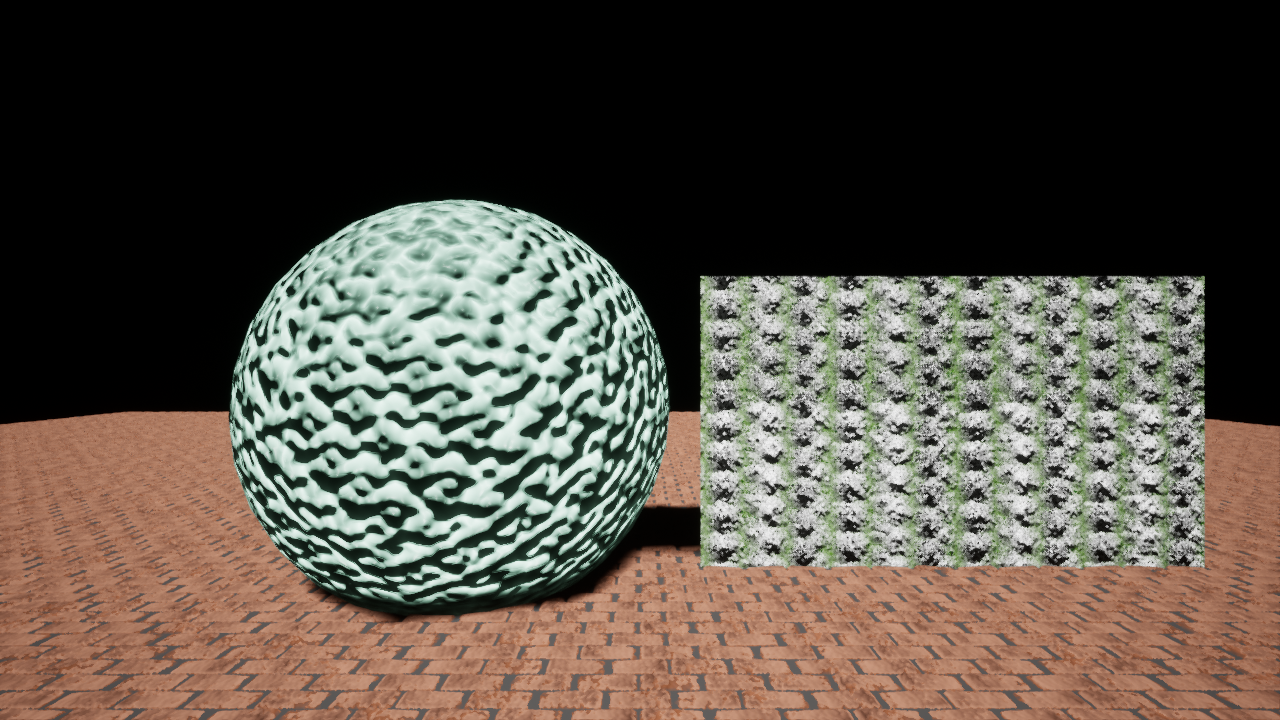

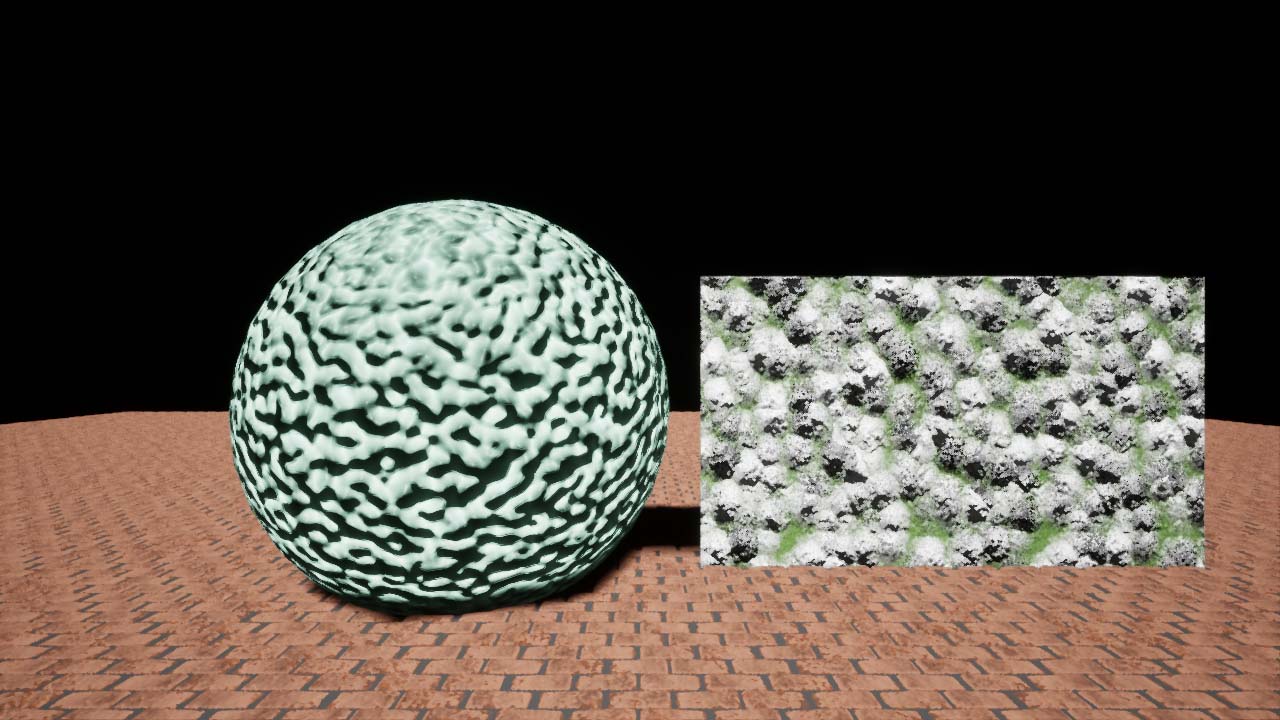

The large photograph above, by Kendall Hoopes, offers numerous examples of randomness at work in nature. Such examples are everywhere and we take them for granted. While nature seems to produce randomness effortlessly, creating aspects of a world that appear random using computer graphics is not effortless. When it doesn’t work, a person can see a repeating set of results that forms a pattern, shattering the realism. Nature may not be entirely free of repetition, but the convincing reality seen in the photograph above, of a real-world location, would surely be compromised if one noticed a repeating pattern in the clouds, mountains, or dirt. That would be a good time to go inside for a nap. In practice, success is likely to require a computing system generating a veritable firehose of numbers, in response to multiple inputs, where each number has an equal probability of being generated, and doing it extremely fast. Those numbers are then used to fill in variables such as shape, form, size, color, opacity, location, and so on. The goal is to have no repeating patterns, or at least none that are perceptible.

IRC Technical Director Mark Jarzynski collaborated with Marc Olano, Associate Professor of Computer Science and Electrical Engineering (CSEE) and Affiliate Professor in the IRC, in research to evaluate numerous current and evolving methods of creating random numbers in this way. Mr. Jarzynski is pursuing his PhD in UMBC’s CSEE department, so the opportunity to work on this challenge with Dr. Olano, a computer scientist with extensive experience working with GPUs in computer games, was a very valuable learning experience. They wanted to compare the quality of the results (just how random they are) with the computational effort required to create them. This would allow those creating computer graphics for things that should appear random to choose the appropriate algorithm, or random hash function, for a given situation. For example, if the quality doesn’t need to be perfect and speed is of the essence, or vice versa, this comparison would help a graphic artist or graphics programmer choose the best hash function for that situation.
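To make the idea of a random hash function concrete, here is a minimal sketch in Python. It is not taken from the researchers' work: the PCG-style scrambling function, its constants, and the names `pcg_hash`, `random_unit`, and `grain_size` are all illustrative assumptions. The point is only to show how a fixed input, such as a pixel coordinate, can be turned into a repeatable but random-looking number that then fills in a variable like size.

```python
MASK32 = 0xFFFFFFFF  # keep arithmetic in 32 bits, as a GPU unsigned integer would


def pcg_hash(v: int) -> int:
    """Scramble a 32-bit integer (PCG-style; the constants are an assumption)."""
    v &= MASK32
    state = (v * 747796405 + 2891336453) & MASK32
    word = (((state >> ((state >> 28) + 4)) ^ state) * 277803737) & MASK32
    return ((word >> 22) ^ word) & MASK32


def random_unit(x: int, y: int) -> float:
    """Map a pixel coordinate to a repeatable value in [0, 1)."""
    # Nesting the hash is one simple way to combine two inputs into one.
    return pcg_hash(x + pcg_hash(y)) / 2**32


def grain_size(x: int, y: int, smallest: float = 0.1, largest: float = 2.0) -> float:
    """Hypothetical use: pick a size for the grain of sand at (x, y)."""
    return smallest + (largest - smallest) * random_unit(x, y)


if __name__ == "__main__":
    # The same coordinate always yields the same size, with no stored state.
    for x in range(4):
        print(x, round(grain_size(x, 0), 3))
```

Because the result depends only on the input, every pixel can compute its own value independently, which suits the way a GPU works on many pixels at once. The trade-off the researchers measured is how much scrambling a function like this does (its quality) against how long that scrambling takes (its speed).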

This research identifies the most useful of these random hash functions as the ones on the Pareto frontier: those that are the fastest for their quality or the highest quality for their speed. The project showed that most of the random hash functions used today are not on the Pareto frontier, so for each of them there is another function that is just as good and significantly faster.
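The Pareto frontier can be sketched in a few lines of Python. The function names and numbers below are invented purely for illustration and are not measurements from the study. A candidate is on the frontier if no other candidate is at least as fast and at least as good while being strictly better on one of the two.

```python
# Hypothetical candidates: (name, time cost, quality score).
# Lower cost is better; higher quality is better. All values are made up.
EXAMPLE = [
    ("hash_a", 1.0, 2.0),
    ("hash_b", 2.0, 5.0),
    ("hash_c", 3.0, 4.0),  # slower AND lower quality than hash_b
    ("hash_d", 5.0, 9.0),
    ("hash_e", 6.0, 9.0),  # same quality as hash_d, but slower
]


def pareto_frontier(candidates):
    """Return the names of candidates that no other candidate dominates."""
    frontier = []
    for name, cost, quality in candidates:
        dominated = any(
            other_cost <= cost
            and other_quality >= quality
            and (other_cost < cost or other_quality > quality)
            for _, other_cost, other_quality in candidates
        )
        if not dominated:
            frontier.append(name)
    return frontier


if __name__ == "__main__":
    print(pareto_frontier(EXAMPLE))  # ['hash_a', 'hash_b', 'hash_d']
```

In this made-up data, `hash_c` and `hash_e` fall off the frontier because something else is at least as good for less time, which is the situation the research reports for most hash functions in common use.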

You can find Mark and Marc’s journal article, Hash Functions for GPU Rendering, published in the Journal of Computer Graphics Techniques.

Mark Jarzynski has also presented this research at I3D, a conference for real-time 3D computer graphics and human interaction. You can watch this presentation on I3D’s YouTube channel.

You can do a visual comparison of the hash functions via this ShaderToy.

Researchers and Creators

Associate Professor of Computer Science and Electrical Engineering, Affiliate Professor in the IRC, Marc Olano

IRC Technical Director and Computer Science and Electrical Engineering PhD Student, Mark Jarzynski

Sponsor

Epic Games

Imaging Research Center, UMBC © 2024