Research on food choices and simulated environments

Project Description

“Virtual Buffet” is a virtual reality (VR) space created as a research tool by the Imaging Research Center (IRC), Dr. Charissa Cheah, Professor of Psychology, and Dr. Jiaqi Gong, Assistant Professor of Information Systems. It models a dining hall in VR so that researchers can compare food choices made in the virtual world with those made in the real world.

Significance

During development, the team was able to observe the food choices and habits of participants with complete control over variables and to confirm the accuracy of the information collected within a VR space. The project can therefore be replicated and expanded to answer significant questions that use simulations to study the mechanisms that influence behavior.

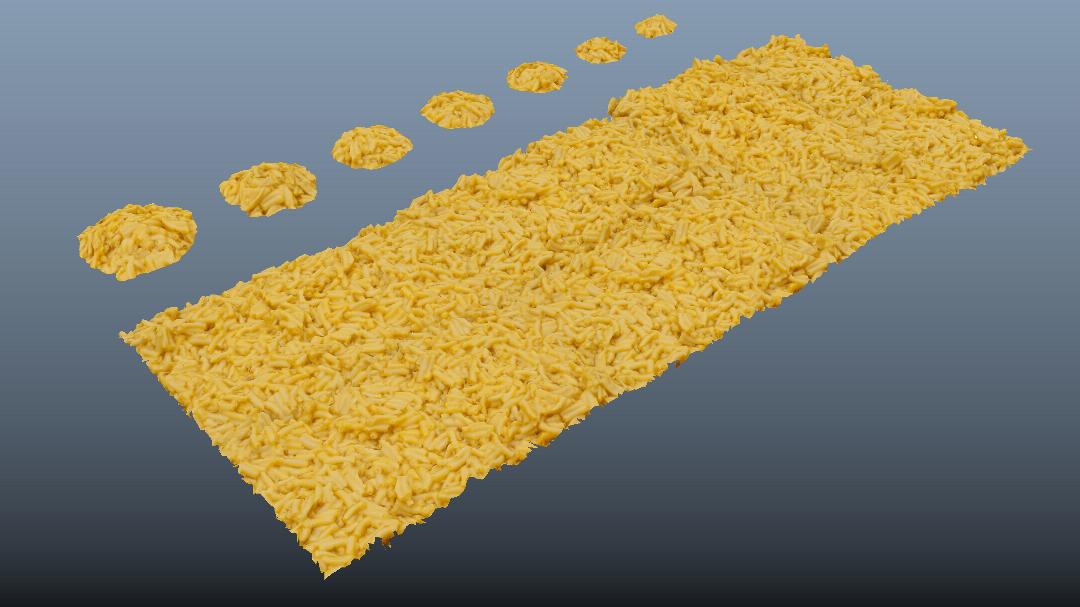

The resulting VR space is an attractive and convincing replica of the original dining hall. Subjects pick up a plate and can then choose from up to seven different portion sizes of foods such as carrots, mashed potatoes, salmon, pizza, and desserts. The drink dispenser fills glasses with water or soda. When subjects have made their selections, they set their plate down by the cash register, and the computer reads the volume of each item and calculates the total calorie count for the plate.
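The calorie calculation at the register can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes each item's calories are its measured volume multiplied by a per-food energy density. The names `KCAL_PER_ML`, `Serving`, and `plate_calories`, and all numeric values, are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical energy densities in kcal per millilitre.
# Placeholder values for illustration only, not figures from the study.
KCAL_PER_ML = {
    "carrots": 0.5,
    "mashed potatoes": 1.0,
    "salmon": 2.0,
}


@dataclass
class Serving:
    """One item on the plate, with the volume the system read for it."""
    food: str
    volume_ml: float


def plate_calories(servings):
    """Sum volume * energy density over every serving on the plate."""
    return sum(s.volume_ml * KCAL_PER_ML[s.food] for s in servings)


# Example plate with made-up volumes.
plate = [
    Serving("carrots", 100.0),
    Serving("mashed potatoes", 150.0),
    Serving("salmon", 50.0),
]
total_kcal = plate_calories(plate)
```

With these placeholder numbers, the plate totals 50 + 150 + 100 = 300 kcal. A real system would need validated densities for each food model.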

Collaboration + Methods

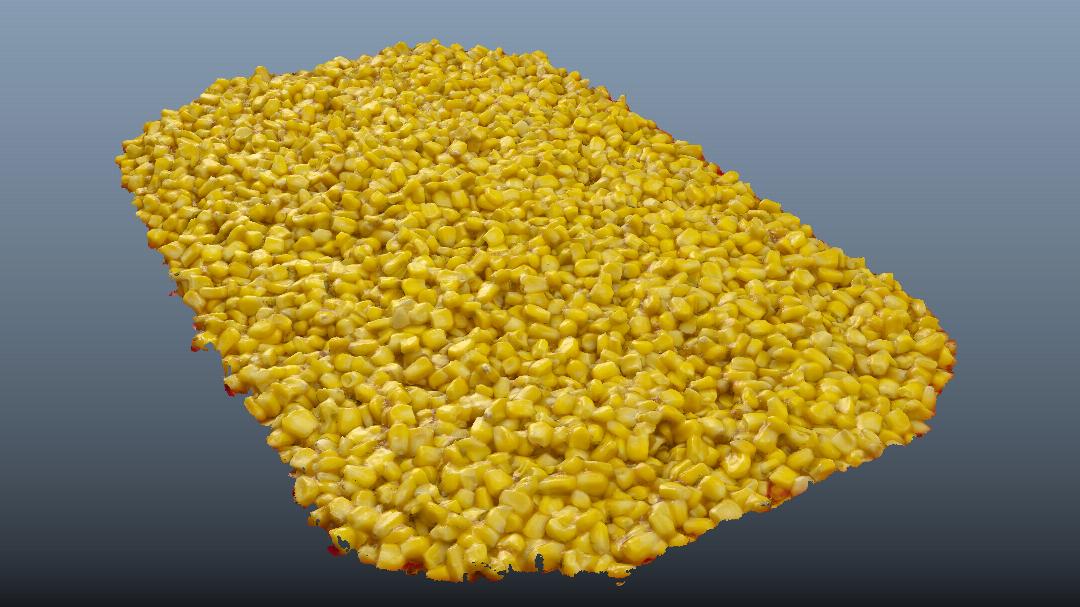

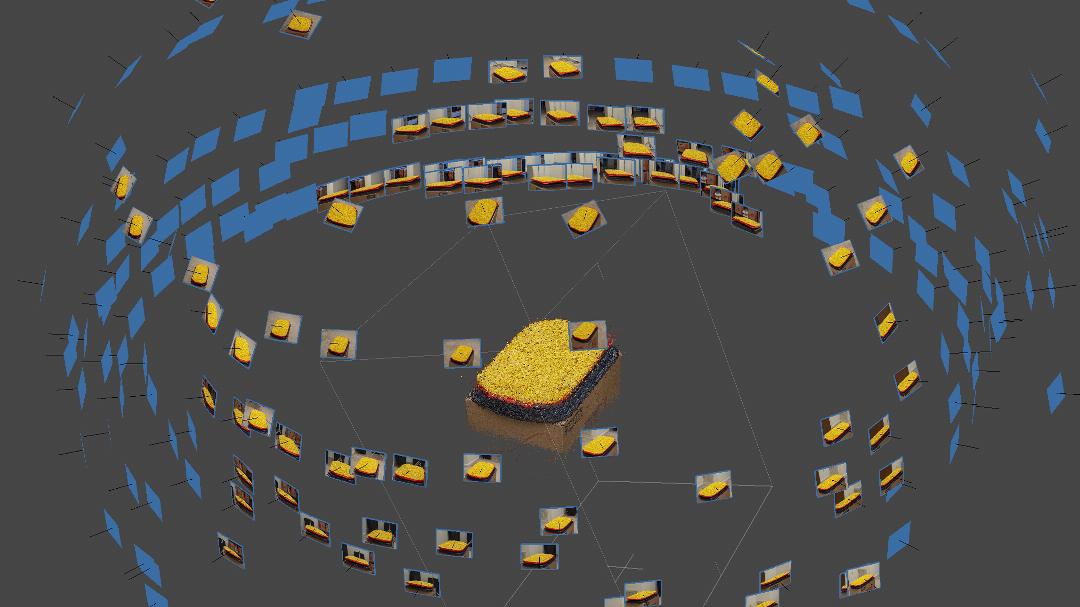

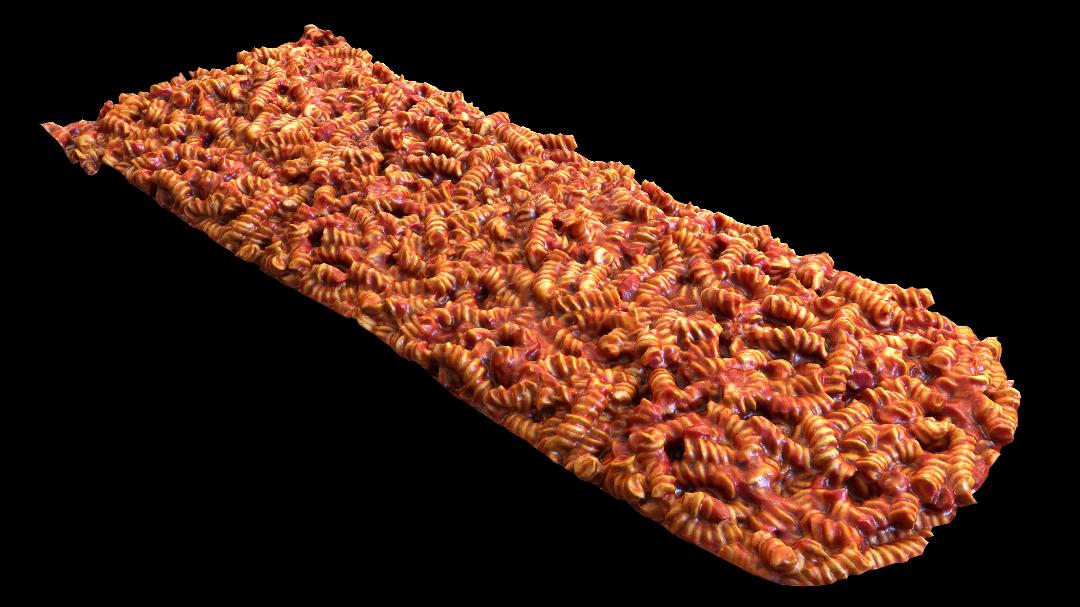

The IRC built the virtual buffet based on UMBC’s student dining hall (True Grit’s). IRC artists and engineers used a variety of tools to model the dining hall, buffet tables, food, and accessories. Objects and food items were modeled using photogrammetry, which computes a 3D model from 96 photographs taken simultaneously from different perspectives. The final models were placed in a 3D VR environment as a virtual buffet, using the IRC’s OMMRL (Observation and Measurement-Enabled Mixed Reality Lab) in the Unreal™ Engine.

The interactions with the buffet in real life (RL) and in VR were tracked and recorded using multiple devices, such as motion sensors, cameras, and biometric sensors that collected various physiological data. Final analysis confirmed that the food choices made in the virtual buffet correlate significantly with those in authentic food buffets.
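One common way to quantify this kind of agreement is a Pearson correlation between each participant's virtual and real selections. The sketch below is an assumption for illustration: the source does not state which statistic the team used, and the function name `pearson_r` and the sample numbers are made up, not study data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 values each")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# Made-up plate totals (kcal) for five hypothetical participants.
vr_kcal = [400, 550, 620, 700, 810]
real_kcal = [420, 500, 650, 690, 850]
r = pearson_r(vr_kcal, real_kcal)
```

A value of `r` close to 1 would indicate that participants who took more food in VR also took more in the real buffet. Judging significance would additionally require a hypothesis test, which is omitted here.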

Researchers and Creators

- Nutrition Consultant: Travis D. Masterson, Dartmouth College

- Project Directors: Charissa Cheah, Professor of Psychology; Jiaqi Gong, Assistant Professor of Information Systems

- Lead Animator/Modeler: Ryan Zuber

- Animation/Modeling Interns: Ali Everitt, Shannon Irwin, Tomas Loayza, Tory Van Dine, Teng Yang

- Lead Programmer: Mark Jarzynski

- Programmer: Caitlin Koenig

Students

- Graduate Assistants from the Sensor-Accelerated Intelligent Learning Lab: Salih Jinnuo Jia, Stephen Kaputsos, Varun Mandalapu, Munshi Mahbubur Rahman, Claire Tran

- Graduate Assistants from the Culture, Childhood, and Adolescent Development Lab: Salih Barman, Hyun Su Cho, Sarah Jung, Tali Rasooly, Kathy Vu

- Matt Wong, ’21 Computer Science, UMCP

Related Contract Work

- Principal Investigator: Susan Persky, Associate Investigator and Head of the Immersive Virtual Environment Testing Area (IVETA), SBRB, NHGRI, NIH

- Project Coordinator: Lee Boot, Director of the Imaging Research Center

- Co-Investigators: Ryan Zuber, IRC Technical Director for Modeling and Animation; Chris Fortney, Lab Director, IVETA

- Sponsorship: National Human Genome Research Institute (NHGRI), NIH

Imaging Research Center, UMBC © 2024